If the scientific community took a deep look into the SEO industry, they’d probably laugh so hard that a couple of them would die from a stroke.

Despite the fact that the industry is maturing at this point, most SEO rules are merely based on anecdotal evidence.

… or theories some well-followed guru came up with based on a six-year-old Matt Cutts tweet.

So we wanted to do what we can to try to level things up and bring actual data to the table, especially after the recent batch of huge updates that came to Google’s core algorithm.

Be kind, this is our first shot at it but we have been building a custom crawler for this post just to analyze 1.1 million search results and share our findings here (wow, just writing this, I can tell this made a looot of business sense).

Here’s a Summary of What We’ve Learned:

- The top position is most likely to be the most relevant result. If there is an obvious result (like a brand), Google will show it, even if it goes against all other ranking factors and SEO rules.

- For less obvious results (i.e. non-branded keyword searches) Featured Snippets are taking over. 50-65% of all number one spots are dominated by Featured Snippets.

- In other words, this is the area where most SEO competition happens. Google is heading towards more immediate answers and fewer clicks on SERPs.

- Because of these 2 things, a lot of the actual SEO competition happens at the second and third place nowadays.

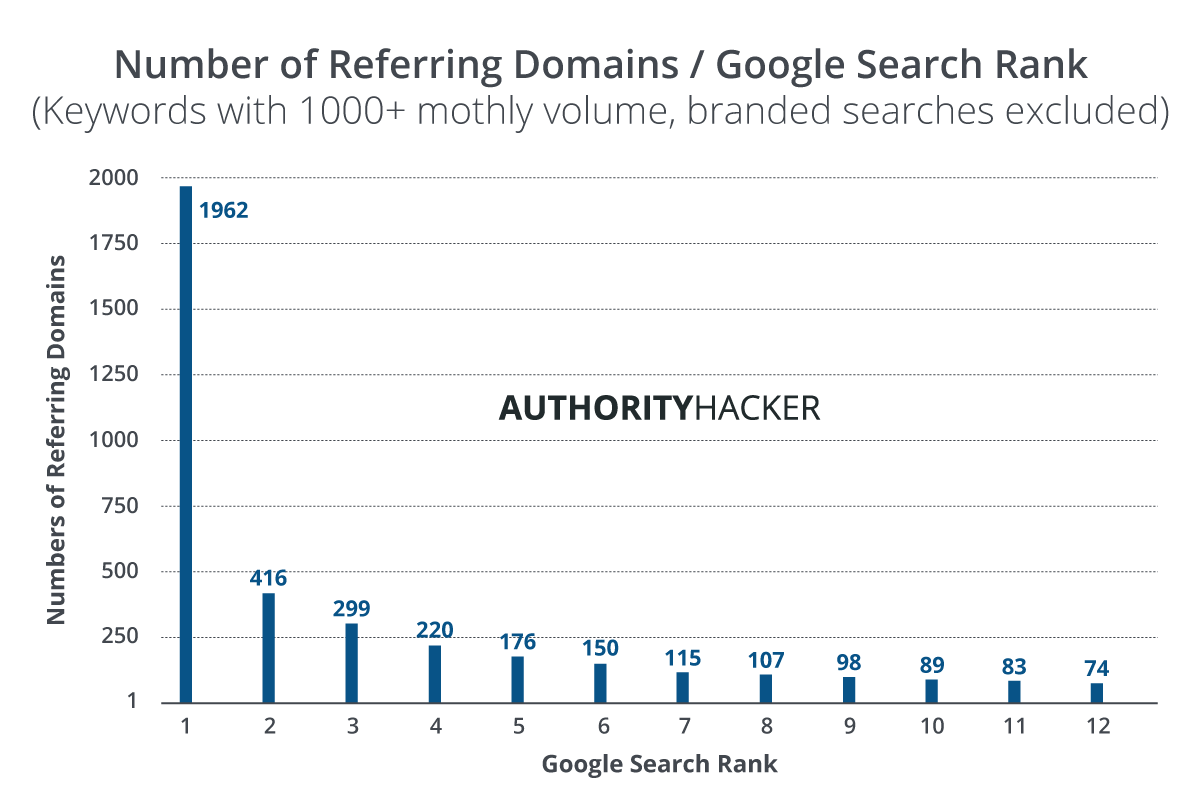

- Backlinks, measured by the number of referring domains to a URL, are still the most strongly correlated factor for SEO success.

- Some of the popular link authority metrics like Ahrefs Rank or Domain Rating proved to be less correlated in our study than we expected.

- Keywords matter. Both the number of keywords in the content and keyword density. Keywords in URL proved somewhat relevant. Keywords in metas, h1 and title tags showed much stronger correlations.

- While longer content does correlate with higher ranks, it’s sensible to think the length itself is not the factor; rather, it provides room for more keywords to be inserted at a non-spammy density.

- It’s better to optimize for the parent topic (highest volume keyword the top result ranks for) than the actual keyword it covers. All high ranked results dominated the “parent topic” over the keyword they ranked for.

- HTTPS is now a must to rank. No news here, Google made it clear already.

- Some of the SEO hearsay proved completely invalid. For example, the rumor that Google treats high-volume keywords differently, or that it prefers content with embedded YouTube videos over other video platforms.

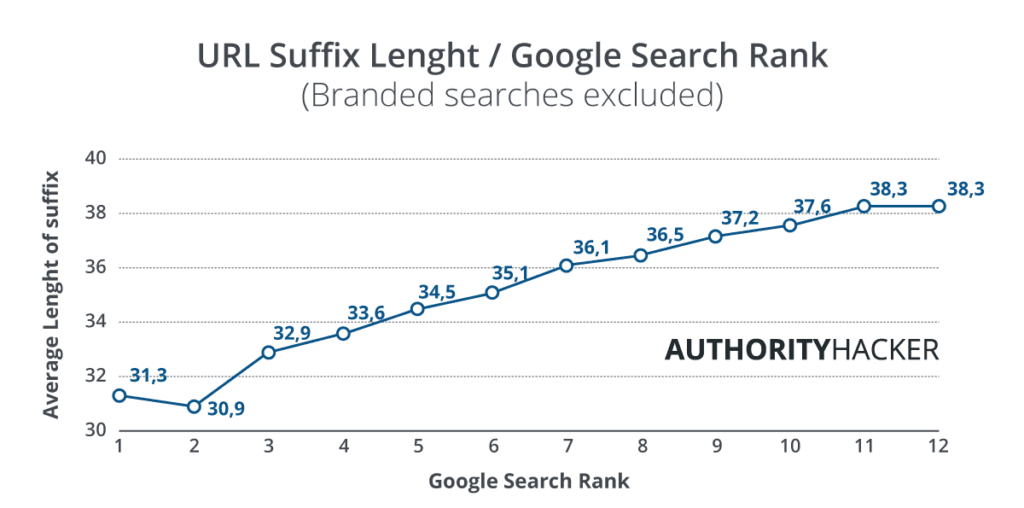

- Some well-established beliefs might just be a result of bad data science in previous studies. For example, the length of the URL being a strong ranking factor.

- All first page results show a high average score (over 90%) for Google’s Lighthouse Audits (SEO), but no link was found between higher scores and top ranks.

- Page Speed seems to help, but not as much as expected. You should want your pages to load fast, for various other reasons anyway.

- Needs further study: Some results on page two mimic the metrics of the top results on page one – there seems to be a thin line between doing everything right and appearing spammy.

Keep reading to learn more details about the findings …

About This Study

In short, for this study, we pulled out 120,000 random keywords from Google Keyword Planner, half of which we made sure get at least 1000 searches a month.

We then got the top 10 SERP results for each and pulled additional data from Ahrefs (such as domain rating), Google APIs, and our own custom-built crawlers.

Overall, we ended with quite a rich dataset of some 1.1 million SERP results. The details of how this study was done and examples of data we worked with can be found here.

Existing Studies, What’s Out There

Naturally, before getting started with this we had a look at what studies have been done in the past. Surprisingly, not so much.

What has been done comes out either as a study released by one of the SEO data vendors like Ahrefs (who are doing a great job) or as a third-party analysis of data donated by vendors, such as this 2016 study by Backlinko. That study served as our inspiration for this piece but felt a little outdated given the massive algorithm changes we have had in 2018 and 2019.

Then you have something like Moz’s annual survey of SEO pros, where they report their day-to-day experience. All of these we found valuable to help us get started.

The rest of what you find is based on hearsay, anecdotal evidence, analysis of Matt Cutts’ past blog posts and tweets, and recycled findings from past studies.

Obviously, we were excited to do this.

Limitations and Challenges of Our Study

It was quite an eye-opening experience working with so many data points and we ran into many challenges.

Some of them were tricky: they produced results that seemed perfectly fine but were actually invalid or unreliable. The eye-opening part was that a great deal of what we know about SEO may come from such results.

For example, when dealing with such a large set of random keyword data (100,000 in this case) you are going to get a lot of branded keywords there.

That means comparing the results of the first rank with the rest will yield very different results, because with branded keywords Google won’t care much about small SEO factors like HTTPS or page speed when there’s one obvious answer to the query.

As a result, the average of rank one stats will often look very different to everything that ranks below.

This is something that has also shown up in other people’s case studies, such as those from Ahrefs or Backlinko. The effect of SEO is often easier to see in the aggregate data for the rank two and three results.

Or another example – a great deal has been written about how shorter URLs lead to better ranking and it’s been backed by past studies.

Well, if you work with aggregate results and diverse keywords, it’s more likely the higher ranks will be for the actual homepages, without a suffix, because they’re relevant to specific keywords and would rank for the query anyway, not because they have a short URL.

In other words, for many keywords, you’re more likely to find something like www.website.com among the higher ranks and something like www.website.com/top-ten-something further down, which yields a shorter average URL length at the top.

We’ve done our best to filter a lot of such statistical noise out.
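To make the URL length trap concrete, here is a minimal Python sketch. The rows and the `is_homepage` helper are invented for illustration and are not taken from our dataset; the point is only that one homepage at rank one can pull the average length down, and that the pattern can flip once homepages are set aside.

```python
from statistics import mean
from urllib.parse import urlparse

# Hypothetical (rank, url) rows; not real study data.
rows = [
    (1, "https://www.brand-a.com/"),
    (1, "https://www.site-b.com/vegan-protein-bars-review"),
    (2, "https://www.site-c.com/best-vegan-protein-bars"),
    (2, "https://www.site-d.com/top-ten-protein-bars"),
]

def is_homepage(url):
    """True when the URL has no path beyond the root."""
    return urlparse(url).path in ("", "/")

def mean_url_length_by_rank(rows, skip_homepages=False):
    """Average URL length per rank, optionally ignoring homepages."""
    lengths = {}
    for rank, url in rows:
        if skip_homepages and is_homepage(url):
            continue
        lengths.setdefault(rank, []).append(len(url))
    return {rank: mean(vals) for rank, vals in sorted(lengths.items())}

# With homepages included, rank 1 looks shorter on average.
raw = mean_url_length_by_rank(rows)
# With homepages removed, the same data no longer shows that pattern.
filtered = mean_url_length_by_rank(rows, skip_homepages=True)
print(raw, filtered)
```

Dropping homepages is just one possible filter; treat it as an assumption about how such noise could be handled rather than a full description of our cleaning steps.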

OK, so here are the actual findings….

1 It’s Still Worth Fighting for the #1 Spot

Ever heard of the Pareto Rule? It’s the idea that a great majority of results in any area come from the “vital few”, and many internet blogs have written about it.

Well, first of all, Pareto was an economist and the Pareto Rule is an economic concept. And the world of business and economics seems to be dominated by this rule.

For example, 99% of apps in the mobile app stores make no or little money while all the billions go to the top 1%. Or 95% of retail securities market investors barely break even.

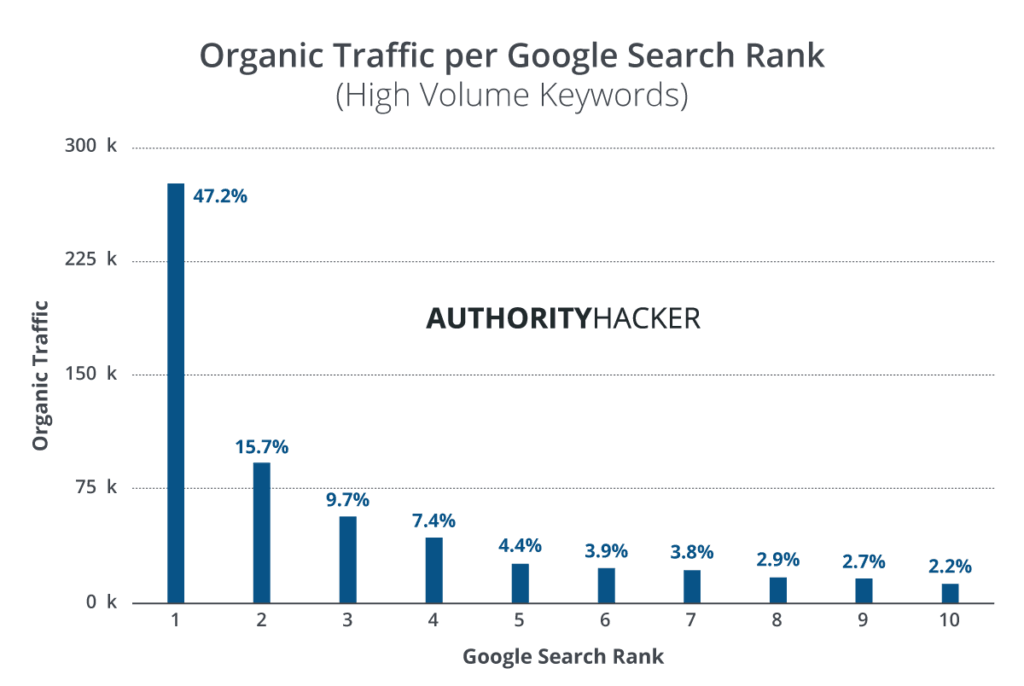

It’s the same with SEO. The number one ranks dominate the market leaving the rest pretty much in the dust.

That is not only due to the fact that the #1 result is #1 for the keyword we looked at. It is also because the #1 result ends up ranking for way more keywords on average.

And its #1 ranking makes it gain more organic links which reinforces its position. It’s a virtuous circle.

From time to time I come across some “alternative facts” and SEO ideas, such as that you should aim for spot number two or three on the assumption that most people skip the first result out of distrust.

Looking at the organic traffic we obtained from Ahrefs, it doesn’t seem to be the case.

Conclusion: It’s worth fighting for the #1 spot when you are on the first page for a keyword. Often more so than battling for new keywords if you have a fighting chance.

2 Featured Snippets Are Growing Really Fast

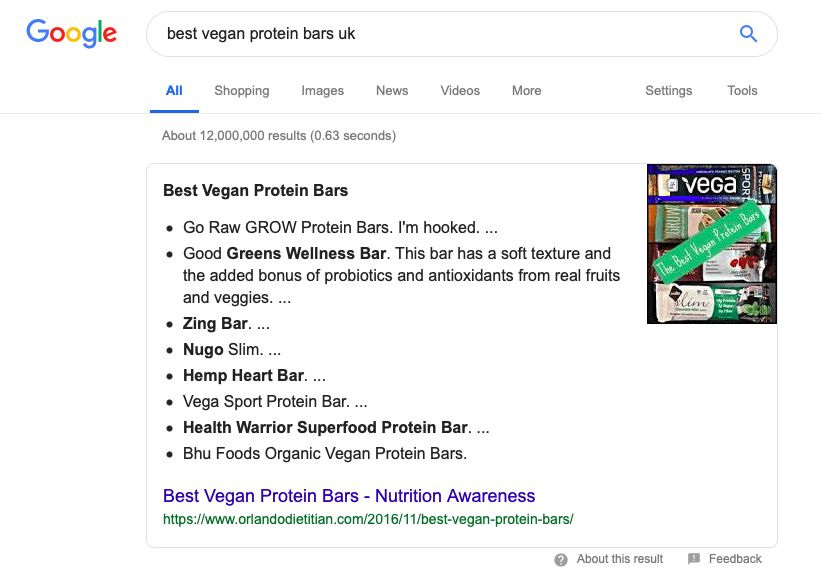

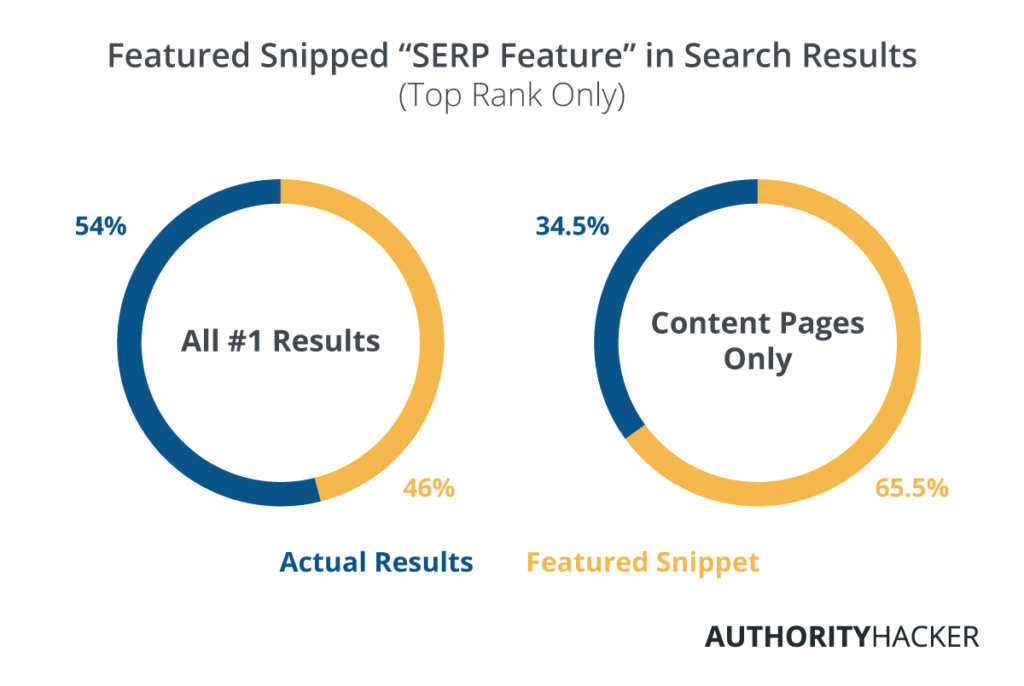

Overall, 46% of the top positions in our study were occupied by a SERP feature. For keywords that get a decent amount of searches, the figure was slightly higher at about 48%.

If you’re an SEO, this is not the most relevant figure.

Basically, with branded keywords, there’s no need for a SERP feature if searching it can yield a very specific result such as a particular Amazon page.

So, after we stripped the results of likely branded queries, the results jumped to 65.5%.

That is a lot more than the 12.29% Ahrefs found in mid-2017, even considering a healthy margin of error.

In other words, for non-branded keywords, the number one rank is getting replaced by an immediate answer.

What this means is that there’s a clear shift towards Google monopolizing the traffic and its users may end up getting immediate answers, never clicking on the links and reducing the overall volume of organic traffic over time.

Our advice here is to optimize your page so you end up in one of those SERP features.

Hubspot did a case study on this and showed that, for large queries, when they did appear in the featured snippets, their page was getting a higher CTR than when they did not.

Ideally, you’d want to occupy the second rank as well as optimize towards attracting traffic in the new SERP-feature-dominated world. For example, by having a strong title.

Conclusion: We are heading towards featured-snippet-dominated search results and there is nothing you can do about it. Don’t just optimize with the aim of becoming one; aim to be the first “classic” organic result as well.

3 HTTPS Matters, But Relevance Beats It

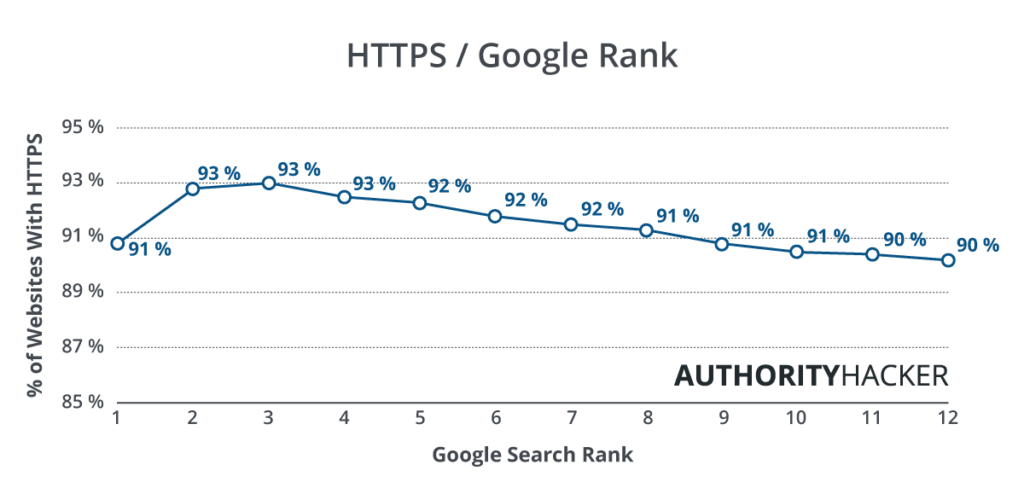

There’s nothing new here, Google has been pushing for HTTPS for quite a while. There’s been a threat of losing ranks and Chrome will literally call your site insecure if you don’t have an active SSL certificate.

So far, well over 90% of the page one results already have HTTPS which shows most sites have now transitioned to SSL and Google is rewarding them for it.

The #1 ranking, however, has a lower correlation most likely due to that relevance factor we mentioned earlier.

That’s simply because if you search something like “child support center” from an IP in Ohio, Google will place the website of the local child support center at the top of the page, regardless of whether they have an SSL certificate or not.

For such queries, we found out that neither HTTPS nor any other SEO factors play a huge role.

Conclusion: Secure your site with HTTPS if you haven’t done it yet. Whatever the results of this study, Google made their direction clear and you will probably suffer the consequences if you delay this more.

4 Backlinks Are Still the #1 Ranking Factor

There’s no news here. Backlinks remain the most important ranking factor.

We measured the total number of referring domains pointing to the URL and that yielded the highest correlations with top ranks.
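As a rough illustration of what “correlation with rank” means here, the sketch below computes a Spearman rank correlation between SERP position and referring-domain counts. The numbers are invented, and Spearman is simply a reasonable choice for this example; take it as an assumption, not a statement of the exact statistic behind our charts.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def pearson(x, y):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranked values."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical data: SERP position and referring domains for one keyword.
positions = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ref_domains = [120, 95, 80, 82, 60, 41, 45, 30, 22, 25]

rho = spearman(positions, ref_domains)
print(round(rho, 3))
```

A strongly negative value means that results closer to position 1 tend to have more referring domains, which is the direction you would expect if links matter.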

At this point, we can only talk about quantity and IP diversity being a factor. (I believe Ahrefs has done some more extensive research into backlink profiles, but I couldn’t find it again).

Right now I tend to believe that the factors SEOs talk about, like length of content or presence of an image, may not be factors Google considers at all, but simply happen to correlate with higher-quality content that gets linked to.

We’ve looked at other popular metrics too, like Ahrefs Rank, and found no correlation. Domain Rating seemed to be somewhat relevant.

Conclusion: Spend more time building content that gets linked to, instead of reading all those SEO guides and articles.

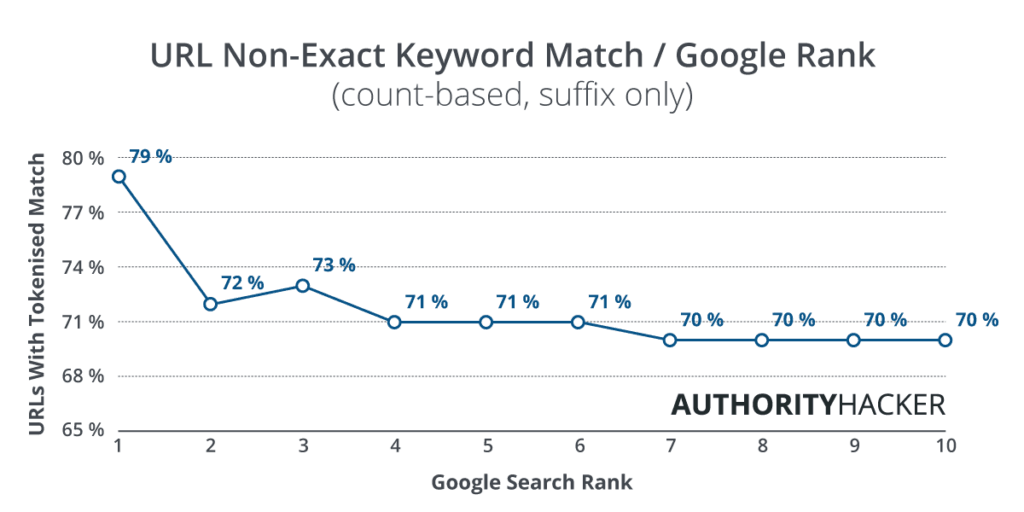

5 URL Length and Keywords in URL

The current belief in the SEO industry is that the length of the URL impacts the rank. Previous studies have also correlated higher search ranks with an exact match of the keyword in the URL.

We also found the same correlations but were suspicious about them. It’s just logical that the higher you go in the search ranks, the shorter the URL is going to be, as you’ll be getting more results for the homepage with no suffix, and short category pages.

When using a large sample of keywords, a few such results will dramatically impact the average length.

With the presence of keywords, it’s a slightly different story. Take a look at two URL examples below.

- www.mywebsite.com/protein-bars-vegan-athletes

- www.mywebsite.com/vegan-protein/

Let’s say you want to rank for a keyword “vegan protein bars.” The standard advice is to use a suffix like the latter example, i.e. /vegan-protein/.

When looking for an exact match in the URL suffix, in general, we found no correlation.

If we included the root domain, the top few would stand out with higher numbers so it’s sensible to assume the keywords in the root domains (or subdomains) seem to be somewhat relevant despite what Google says.

Take the same keyword, “vegan protein bars.” When we tokenized it into “vegan,” “protein,” and “bar” and simply counted the occurrences of these tokens in the URL, we found a much stronger correlation.

With this method, the first, longer URL would be more likely found higher in the search ranks.
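Here is a small Python sketch of the two ways of scoring a URL described above, using the two example URLs. The function names are made up for this example, the tokens are passed in by hand exactly as listed in the text, and counting plain substrings is an assumption (it would also match “bar” inside an unrelated word like “barn”).

```python
from urllib.parse import urlparse

def url_path(url):
    """Return the lowercased path part of a URL, with or without a scheme."""
    if "://" not in url:
        url = "http://" + url  # urlparse needs a scheme to find the host
    return urlparse(url).path.lower()

def exact_match(keyword, url):
    """True if the hyphenated keyword appears as-is in the URL suffix."""
    slug = keyword.lower().replace(" ", "-")
    return slug in url_path(url)

def token_hits(tokens, url):
    """Count how many times the keyword tokens occur in the URL suffix."""
    path = url_path(url)
    return sum(path.count(token) for token in tokens)

keyword = "vegan protein bars"
tokens = ["vegan", "protein", "bar"]  # tokens as listed in the text

long_url = "www.mywebsite.com/protein-bars-vegan-athletes"
short_url = "www.mywebsite.com/vegan-protein/"

for url in (long_url, short_url):
    print(url, exact_match(keyword, url), token_hits(tokens, url))
```

Neither URL is an exact match for the keyword, but the longer one contains all three tokens while the shorter one contains only two, which is why the token count favors it.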

Conclusion: I think URL length and the keywords in it bear much smaller significance than generally believed. Even with some correlations, looking at all the results we’ve got, the case isn’t that convincing.

It might, however, be a good practice to keep it short and concise, but long enough to feature all the important words in high-volume keywords you want to rank for.

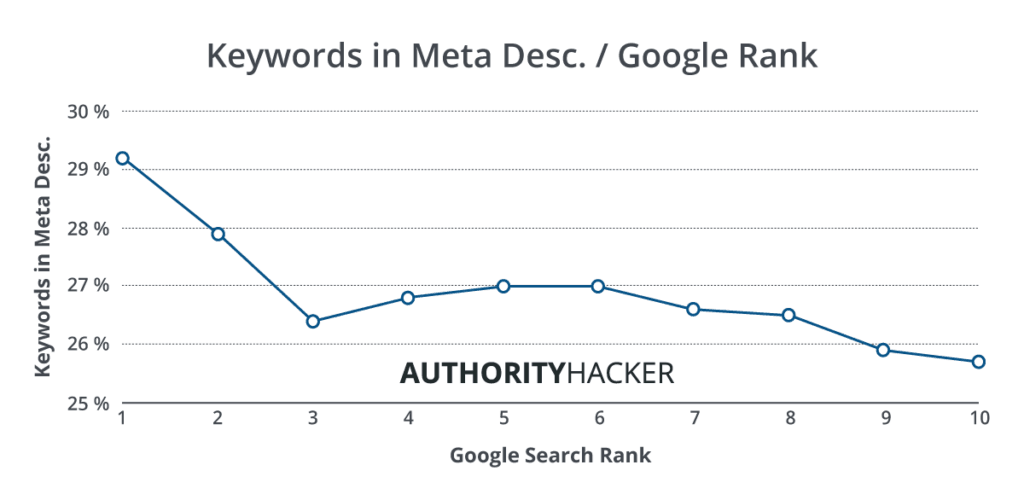

6 Keywords in Meta, Title Tags, H1 Tags, etc.

We managed to crawl 90% of the entire 1.1-million-URL dataset (the other 10% blocked our request or somehow weren’t accessible for crawlers).

We found a much stronger correlation for keywords in meta descriptions, H1 and Title tags than we did in the URL analysis. We can take it as a fact that Google looks at these, as their Lighthouse audit tool makes it relatively clear that they matter.

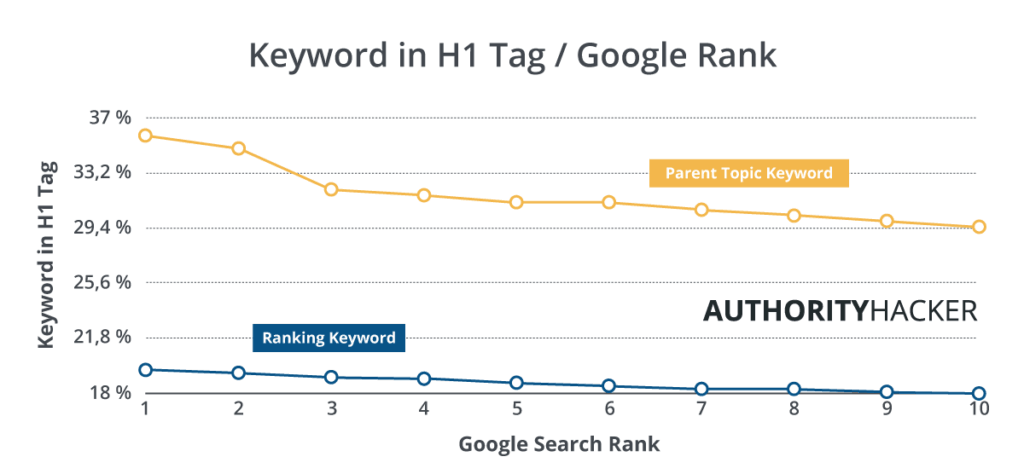

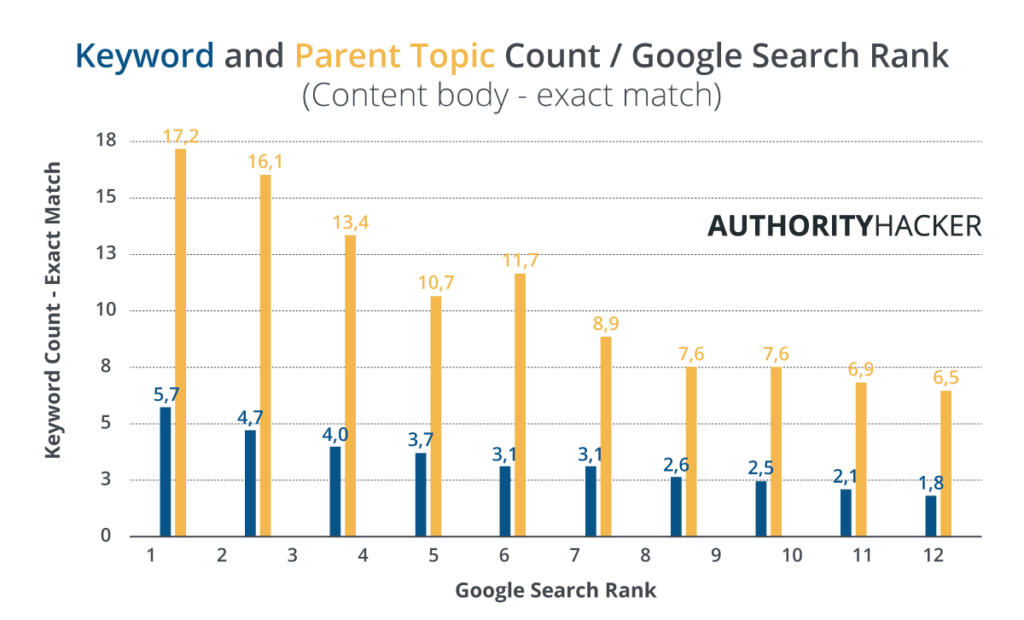

In each case, there was a quite solid correlation for the keyword we got the ranks for, but even stronger for the parent topic.

In fact, parent topic keywords (the highest volume keyword the top result ranks for; read more about this metric here) were as much as twice as likely to show up in these properties.

This encouraged us to look more at the parent topic when looking at keyword presence for the next tests to see just how valuable it is for SEOs.

One thing that I noticed looking at the actual list of parent topics, though, is that they are often shorter-tail versions of the keyword we were analyzing, which explains why they often beat the actual keyword in correlation.

Conclusion: Optimize these properties for your keyword, but don’t forget to aim at the parent topic in the first place. We measured exact match in this analysis by the way.
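For readers who want to run a similar exact-match check on their own pages, here is a minimal sketch using only Python’s standard-library `html.parser`. The class and function names are hypothetical and the sample page is invented; it illustrates the idea and is not our crawler.

```python
from html.parser import HTMLParser

class TagTextExtractor(HTMLParser):
    """Collects the title, H1 text, and meta description of an HTML page."""

    def __init__(self):
        super().__init__()
        self.fields = {"title": "", "h1": "", "description": ""}
        self._open = None  # "title" or "h1" while inside that tag

    def handle_starttag(self, tag, attrs):
        if tag in ("title", "h1"):
            self._open = tag
        elif tag == "meta":
            attr = dict(attrs)
            if (attr.get("name") or "").lower() == "description":
                self.fields["description"] = attr.get("content") or ""

    def handle_endtag(self, tag):
        if tag == self._open:
            self._open = None

    def handle_data(self, data):
        if self._open:
            self.fields[self._open] += data

def exact_match_flags(html, keyword):
    """Report, per property, whether the keyword appears as an exact phrase."""
    parser = TagTextExtractor()
    parser.feed(html)
    kw = keyword.lower()
    return {name: kw in text.lower() for name, text in parser.fields.items()}

# Invented sample page for illustration.
page = """<html><head><title>Vegan Protein Bars: 10 Best Picks</title>
<meta name="description" content="Our guide to protein bars for athletes.">
</head><body><h1>The Best Vegan Protein Bars</h1></body></html>"""

flags = exact_match_flags(page, "vegan protein bars")
print(flags)
```

Running the same function with the parent topic instead of the keyword gives the second number we compared in this section.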

7 Content Length and Content Keywords

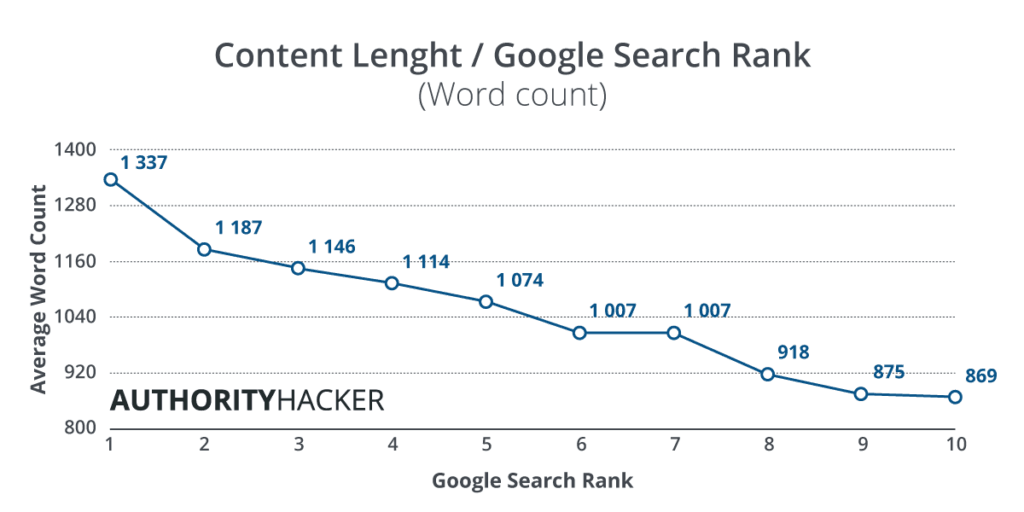

There’s a strong belief in the SEO industry that longer content delivers higher ranks, or that Google somehow prefers long-form content.

There’s also a general belief that the optimal content length for SEO is around 2,000 words. While the study did find a correlation for content length, I tend to disagree with the general view.

First of all, any bigger study will have a hard time precisely assessing the length of content, unless it is done manually. Web scrapers tend to grab elements that don’t belong in the word count and inflate the numbers: things like comment sections, side navigation and author boxes.

Other times, the studies published on this topic would come from software companies that have their business in content marketing and thus they’ll be biased to tell you that longer content is better.

For this study, we tried a bunch of different solutions and finally settled on one built around the Readability library that powers the reader view in the Firefox browser, as it was by far the best at isolating content and removing navigation, comments etc.

While we found a beautiful correlation for word count, the overall content length was shorter than generally believed once you strip much of the noise more generic scrapers tend to pick up.

But here’s the most exciting finding.

Keyword count showed a beautiful correlation too. On average, we found the keyword appeared in the readable text six times.

What’s more exciting is that the parent topic would appear in the readable part of the text almost three times as often as the keyword we pulled the SERPs for.

Another interesting thing is that the density of keywords correlates too, in spite of higher ranks having a longer average word count.
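To show how keyword count and density relate, here is a short Python sketch. It assumes the readable text has already been extracted (for example by a Readability-style tool), and it defines density as the share of all words that belong to a keyword occurrence. That definition and the `keyword_stats` name are assumptions for this example; other tools define density differently.

```python
import re

def keyword_stats(text, keyword):
    """Return (exact-phrase count, density) for a keyword in plain text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    kw = keyword.lower().split()
    n = len(kw)
    # Slide a window of n words over the text and count exact phrase matches.
    count = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == kw)
    # Density: words that are part of a keyword occurrence / all words.
    density = count * n / len(words) if words else 0.0
    return count, density

# Invented sample text for illustration.
sample = "Vegan protein bars are popular. These vegan protein bars taste great."
count, density = keyword_stats(sample, "vegan protein bars")
print(count, round(density, 3))
```

With this definition, a longer article can repeat the keyword more often while keeping the same density, which is the effect discussed in this section.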

Conclusion: What that leaves us with is that it may not be the length of content itself that affects your SEO; rather, length allows you to show Google more keywords while maintaining a credible density.

In other words, if you don’t optimize your content for keywords, the length of content won’t help much. (Unless it’s so good that the sheer quantity of its value makes more sites link to you).

8 Lighthouse Audit and PageSpeed Insights

Finally, we wanted to have a look at page speed and whatever else was accessible using Google’s API tools.

A great deal has been said about how speed is critical for SEO.

Some correlations were found in the past, but they usually came from sources like Alexa. I assume because it’s the cheapest and the easiest way to get some additional data.

We considered Alexa too, but many users report inaccurate data, so we turned to Google’s own PageSpeed Insights tool, a much costlier way to obtain data.

This tool gives you an overall score for a URL, looking at a number of factors, including speed, overall performance, SEO best practices, and mobile optimization.

A few months ago, it was updated with the latest version of the Lighthouse audit tool. It contains an SEO audit which looks at the following points and scores them:

- Document has a valid `rel=canonical`

- Document has a `

While we learned that on average over 90% of all pages comply with all points, there was no correlation between the high rank and higher scores. In fact, it seemed slightly inverted.

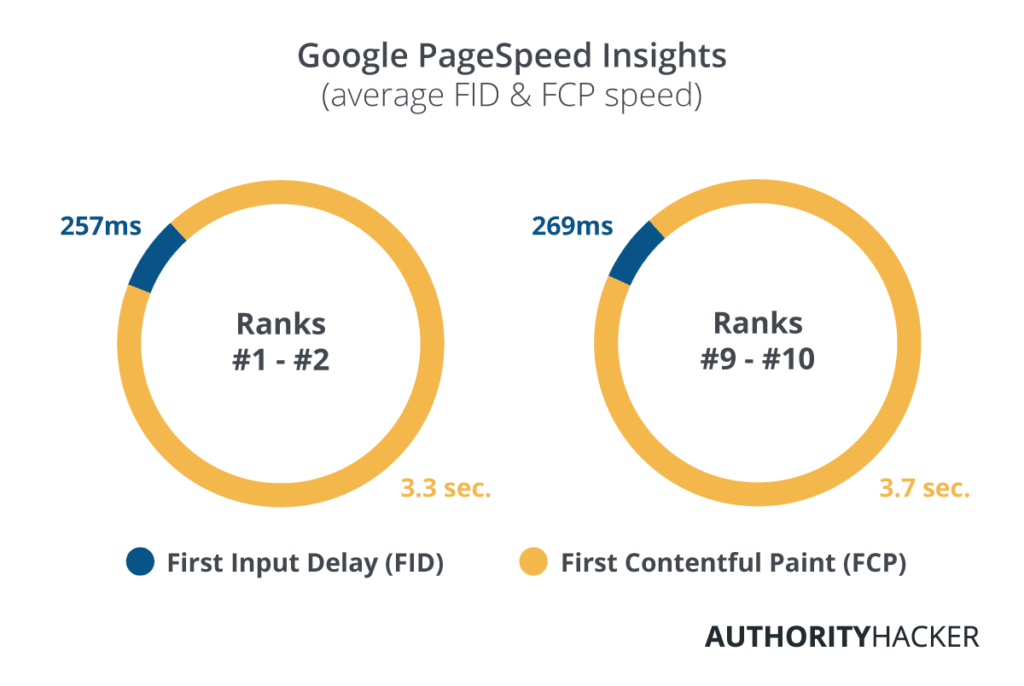

Then we looked at the page speed results. We looked at:

- First Input Delay (FID): the time from when a user first interacts with your site to the time when the browser is able to respond.

- First Contentful Paint (FCP): the time from navigation to the time when the browser renders the first bit of content from the DOM.

- … and overall speed.

The results are that the top ranked pages are slightly faster, but most scored AVERAGE or SLOW overall, and AVERAGE for FID and FCP.

Conclusion: It seems to be a good practice to optimize with the updated PageSpeed Insights, but don’t expect miracles for your rankings and don’t sacrifice premium user experience and functionalities for a few points of pagespeed.

Obviously, there are numerous other reasons why you’d want your page to be fast – such as better conversions and lower bounce rates.

9 Other Observations

All of the above are just the interesting or mention-worthy findings we obtained from the study. However, we looked at a bunch of things while conducting it.

For example, we wanted to see if the presence of a video in the content has any impact on SERPs or whether it’s true that Google prefers pages using YouTube to other video platforms.

None of these assumptions proved valid. At least not in our analysis.

We also assumed the rumor that Google runs a different algorithm for high-volume keywords is true, and ran each analysis on different volumes separately, only to find similar results every time.

Finally, the most interesting thing to see, and something Authority Hacker observed before too, is that oftentimes the top results on page two seem to show similar metrics to the actual top-ranked pages.

For example, similar keyword density, Ahrefs Domain Rank, HTTPS prevalence, and so on. Yet, they’ve never made it to page one.

It seems there are some credibility issues (maybe EAT?), or that a thin line between doing things right and coming across as spammy exists in Google’s algorithm.

Final Conclusion

After looking at these results, I think a lot of people have an interest in making SEO seem overly complicated to maintain a guru status.

I do believe a lot of the little technicalities like keywords in the URL or page speed are important for extremely competitive queries where 1% advantages can make the difference.

But in most cases, they are not as impactful as most people think.

The study has proved once again that Google does a great job at establishing relevance and when there’s a clear choice it pays little attention to the technical aspects of SEO.

You could already observe this in Backlinko’s study from 2016 – notice how the first rank always shows lower scores in most charts.

For all the content where SEO does play a role, I think the answer is straightforward. Do your keyword research, build a unique, highly-valuable piece of content and promote it so you get links.

Have a clean site both from the technical perspective and user experience perspective and you should be good to go.

It gets a bit more challenging when your sites become very large and you need to organise content in a logical way.

But once again, this does not apply to most site owners.

People tend to believe Google is deploying some overly sophisticated NLP and AI algorithms to establish which pages to push higher and which to penalize.

I rather think it does a great job at collecting, sorting and organizing incredible amounts of very basic data (like keyword occurrences and links), finding patterns in it and drawing conclusions.

So I’d focus on those simple things.

But that’s my personal opinion based on what I know about the current state of NLP and AI technologies – they’re way more primitive than people think they are.

The results from this study have only solidified this opinion.

Here’s the link to our research methods in case you want more details, or would like to replicate this study.